Authored by BresMed, now part of Lumanity

Therapies designed to target cancers with specific molecular signatures have reshaped the landscape of oncological drug development.1 As the next generation of tumour-agnostic therapies makes its way through clinical trials, we should be asking ourselves how to prepare for the upcoming challenge of reimbursement in this space, and what lessons can be learnt from the experience of existing therapies. Over the coming months, in a series of five short papers, we will provide recommendations on how to overcome the common challenges in this space, drawing on our own experience and on a review of the experience of the tropomyosin receptor kinase (TRK) inhibitors larotrectinib and entrectinib. In this third paper of the series, we focus on the difficulties of estimating the comparative effectiveness of tumour-agnostic treatments.

Issues with estimating comparative effectiveness

To provide meaningful evidence of incremental effectiveness and cost-effectiveness for any medical intervention, two key issues relating to comparators must be addressed:

- What is the relevant comparator(s)?

- How do we define effectiveness relative to our treatment (the counterfactual)?

Things are more complicated when comparing interventions in a tumour-agnostic indication. For many tumour-agnostic clinical trials, within-trial control data are not collected. This means that the evaluation of cost-effectiveness also requires at least some of the following:

- The generation of a comparator arm

- The use of information from published literature

- Direct data collection of a historical or contemporary observational control

- In-trial analysis of earlier lines of treatment or non-responder data

Of course, targeting a tumour-agnostic indication does not preclude inclusion of a control arm. As noted by Stefan Lange, Deputy Director of the German Institute for Quality and Efficiency in Health Care (IQWiG), ‘basket studies can also be conducted with control groups’.2 Unfortunately, many planned trials in tumour-agnostic indications do not include a control group.3

Where a trial does not include a control arm, generating an appropriate comparator data set is complicated by the need to cover multiple tumour types or histologies. The same heterogeneity can also exacerbate the potential for confounding bias.4

A further issue with using external controls is that the target mutation may be prognostic in some or all tumours. If so, control data must be limited to patients who harbour the target mutation, and such data are difficult to obtain, particularly where the mutation is rare or has not been targeted by treatments in the past. It is also important to understand whether any association with prognosis is independent of other factors that may be associated with the mutation, such as increased age or reduced performance status. For example, the impact of NTRK gene fusions on prognosis remains unclear.5-8

Determining the relevant comparison

When considering the relevant comparator(s) within a tumour-agnostic context, the first question to ask ourselves is: what is the decision problem we are trying to address? Will this consider:

- Optimal placement?

- Optimal pricing?

- Cost-effectiveness in the licensed indication?

To determine the optimal placement and pricing of a therapy, individual comparisons must be made by tumour type – and potentially even by individual comparator, and by line of treatment within each tumour type. An assessment of cost-effectiveness in the full licensed population necessitates a comparison with a blended comparator weighted according to the comparator use and patient characteristics expected in clinical practice.
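A blended comparator of this kind amounts to a weighted average of segment-level comparator outcomes. The minimal sketch below assumes each segment is a tumour type (or a tumour type and comparator pair) with a hypothetical share of expected use in clinical practice; the class, function, segment names and values are illustrative placeholders, not data from any appraisal.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str               # tumour type, or tumour type plus comparator (hypothetical)
    share: float            # expected share of use in clinical practice (hypothetical)
    mean_pfs_months: float  # mean PFS on the comparator in this segment (hypothetical)


def blended_mean(segments: list[Segment]) -> float:
    """Return the share-weighted mean of segment-level mean outcomes."""
    total = sum(s.share for s in segments)
    if total <= 0:
        raise ValueError("shares must sum to a positive value")
    # Normalise the shares so they sum to 1, then take the weighted mean
    return sum(s.share / total * s.mean_pfs_months for s in segments)


# Hypothetical inputs, for illustration only
segments = [
    Segment("soft-tissue sarcoma", 0.25, 4.0),
    Segment("thyroid cancer", 0.25, 8.0),
    Segment("non-small cell lung cancer", 0.50, 6.0),
]
print(blended_mean(segments))
```

Weighting in this way suits additive quantities such as mean survival or costs. Median survival cannot be blended by simple weighting, because the median of a mixture of populations is not the weighted average of their medians.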

For all of these, a robust comparison of effectiveness depends on a good understanding of the comparator landscape for each disease area within the licence, across the geographies of interest, and of the evidence available to make comparisons.

Plan to generate your own comparator; the usual process for generating a single-trial comparison may not work

1: Conduct a systematic review to identify all relevant evidence

Conducting a gold-standard systematic review across all licensed indications is unlikely to be feasible due to the sheer volume of hits for broader indication-specific searches and a lack of evidence specific to the biomarker of interest.

2: Select method(s) of indirect comparison

- Naïve comparison

- Benchmarking with historical controls

- Regression

- Propensity scoring

- Simulated treatment comparison

- Matching-adjusted indirect comparison

- Comparison to self-controls

- Comparison to non-responders

3: Control for bias

Considerable challenges make residual confounding likely:

- Multiplicity of data sources: patient characteristic reporting is likely to differ

- Unlikely that prognostic characteristics will act in the same way across tumour types

- Sample sizes per tumour type likely to be too small

4: Scenario testing

Given the challenges associated with making a comparison, different scenarios may lead to wildly different results. Whilst multiple possible approaches should be tested and presented, it may be necessary to provide priors for the plausible range of estimates via techniques such as quantitative expert elicitation.
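One simple way to turn an elicited judgement into a prior, sketched below, is to fit a lognormal distribution for the hazard ratio (HR) of the new therapy versus standard care to an expert's median and 97.5th percentile. The lognormal form, the two-quantile fit, the function name and the input values are all illustrative assumptions; structured elicitation protocols typically elicit several quantiles from several experts before fitting and pooling.

```python
import math
from statistics import NormalDist


def lognormal_from_quantiles(median: float, upper: float,
                             upper_prob: float = 0.975) -> tuple[float, float]:
    """Return (mu, sigma) of log(HR) so that the lognormal matches both quantiles."""
    if not 0 < median < upper:
        raise ValueError("need 0 < median < upper")
    z = NormalDist().inv_cdf(upper_prob)  # about 1.96 for the 97.5th percentile
    mu = math.log(median)
    sigma = (math.log(upper) - mu) / z
    return mu, sigma


# Hypothetical elicited judgements for the HR versus standard care
mu, sigma = lognormal_from_quantiles(median=0.5, upper=0.9)
prior = NormalDist(mu, sigma)              # prior distribution for log(HR)
lower = math.exp(prior.inv_cdf(0.025))     # implied 2.5th percentile of the HR
upper_hr = math.exp(prior.inv_cdf(0.975))  # recovers the elicited 97.5th percentile
print(f"HR prior: median {math.exp(mu):.2f}, 95% interval {lower:.2f} to {upper_hr:.2f}")
```

The fitted prior can then be set against the estimates from each scenario to judge their plausibility, or used directly as a model input.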

Due to the sheer number of tumour types involved, it is unlikely to be possible to conduct comparisons in a tumour-agnostic indication using the standard methodology of evidence identification by a broad systematic review. Where a new biomarker is involved, restricting the search to studies involving that biomarker will provide a more relevant evidence base for comparison. However, there are likely to be gaps in the data available, particularly when screening for the biomarker is not yet part of current practice. Given that most tumour-agnostic trials are single-arm, this poses a real challenge in establishing the level – rather than just the existence – of incremental effectiveness.

In the appraisals of the TRK inhibitors larotrectinib and entrectinib conducted by the National Institute for Health and Care Excellence (NICE), searches for relevant evidence were limited to previous NICE technology appraisals, with arbitrary assumptions made for tumour types where data were not found.9, 10 In the Swedish appraisal of entrectinib, data were taken either from a cross-tumour study of doxorubicin or from non-responders in the entrectinib trial.11 Neither of these approaches is ideal: patients in the doxorubicin study were not selected for the biomarker of interest, the treatments received in such trials may not represent current standard of care, and the use of non-responders relies on strong assumptions (discussed below). Comparisons based on low-quality evidence like this are likely to become less acceptable as more therapies come to market and more guidance is released on methods for making higher-quality comparisons.

The interpretation of relative-effect estimates from single-arm studies compared with external controls is subject to bias, due to differences between patients selected as controls and those recruited to the single-arm studies.4, 12 Differences between the patient populations can arise in an appraisal for a variety of reasons, including differences in the following:

Geographical region – Treatment pathways, access and outcomes can vary considerably across countries.

Types of sites involved – Large academic centres versus smaller centres, for example.

Patient characteristics – Age, performance status, line of therapy or other prognostic factors.

Timing – Effectiveness of background care, treatment pathway, diagnostic accuracy, population health, and treatment-related factors such as dosing optimization can all vary over time.

The challenges of generating an appropriate control become particularly acute when considering a tumour-agnostic indication. This is due to the need to cover a range of tumour types, including the specific underlying histology associated with the licence, which is unlikely to have been captured in many studies. It is also unlikely that any single data set will provide sufficient coverage to represent the whole target population – nor is it likely that sufficient data will exist to conduct matching exercises in each tumour type.

A key factor in the reliability of effectiveness estimates based on external control data is the type of statistical analysis used. Many studies have sought to develop and evaluate methods for reducing or eliminating bias that results from confounding. These methods include regression analysis, propensity scoring and population-adjusted indirect comparisons (matching-adjusted indirect comparisons and simulated treatment comparisons).13 While these could in theory be applied, in practice the following data challenges are likely to prove insurmountable if manufacturers do not plan their own studies to provide a control arm:

- Large number of source data sets: Patient characteristics may be missing in some and reported differently in others

- Prognostic value of characteristics across tumour types: Questionable as to whether any required assumptions would be justifiable

- Small sample sizes: Likely to preclude analysis by tumour type and limit the ability to account for confounding biases

Despite these limitations, such methods would generally be preferable to a naïve comparison, which does not account for differences across groups.
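To illustrate one of the population-adjusted methods named above, the sketch below computes matching-adjusted indirect comparison (MAIC) weights by the method of moments described in NICE DSU TSD 18: individual patient data (IPD) are reweighted so that their covariate means match published aggregate means. The covariates, target means, simulated IPD and function names are hypothetical, and a real analysis would also need justified covariate selection and appropriate variance estimation (for example, bootstrapping).

```python
import numpy as np


def maic_weights(ipd: np.ndarray, target_means: np.ndarray,
                 max_iter: int = 100, tol: float = 1e-10) -> np.ndarray:
    """Method-of-moments MAIC weights, normalised to sum to 1.

    ipd: (n_patients, n_covariates) individual patient data.
    target_means: (n_covariates,) published aggregate means to match.
    A solution exists only if the target lies inside the range of the IPD.
    """
    x = ipd - target_means                 # centre covariates on the target
    beta = np.zeros(x.shape[1])

    def objective(b: np.ndarray) -> float:
        return float(np.exp(x @ b).sum())  # convex; minimised when means match

    for _ in range(max_iter):
        w = np.exp(x @ beta)
        grad = x.T @ w
        hess = (x * w[:, None]).T @ x
        step = np.linalg.solve(hess, grad)
        t = 1.0                            # backtracking keeps the Newton step stable
        while objective(beta - t * step) > objective(beta) and t > 1e-8:
            t /= 2
        beta = beta - t * step
        if np.max(np.abs(t * step)) < tol:
            break
    w = np.exp(x @ beta)
    return w / w.sum()


def effective_sample_size(w: np.ndarray) -> float:
    return float(w.sum() ** 2 / (w ** 2).sum())


rng = np.random.default_rng(1)
# Hypothetical IPD: age in years and an ECOG 0 indicator for 200 patients
ipd = np.column_stack([rng.normal(60, 10, 200), rng.binomial(1, 0.4, 200)])
target = np.array([55.0, 0.5])             # hypothetical published means
w = maic_weights(ipd, target)
print(w @ ipd)                             # weighted means match the target
print(effective_sample_size(w))
```

A low effective sample size relative to the original sample signals that a few patients dominate the weighted analysis, which is likely when sample sizes per tumour type are small.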

Taken together, these complications leave only two viable options for generating a robust control arm:

- Collect data for the control arm (i.e. create your own synthetic control arm); or

- Use trial data (i.e. non-responder data or prior-line-of-treatment data) to form an internal control

A synthetic control can be used to support both regulatory and reimbursement submissions. For example, in submissions to the European Medicines Agency (EMA) and to health technology assessment (HTA) bodies for the chimeric antigen receptor (CAR) T-cell therapy axicabtagene ciloleucel (axi-cel), patient-level data from the SCHOLAR-1 study were used to provide comparative-effectiveness evidence.14, 15 The EMA and the US Food and Drug Administration (FDA) are now accepting a number of regulatory submissions using a synthetic control arm as a secondary evidence source to support effectiveness claims. The FDA recently accepted a Phase III design combining patients in a synthetic control arm with randomized patients,16-18 while the EMA accepted synthetic control arms generated from real-world data in its recent assessments of alectinib and blinatumomab.19-21

Advancing real world data (RWD) into regulatory-quality real world evidence is a key strategic priority for the FDA.18

A major advantage of generating data to produce a synthetic control is the creation of patient-level data, which allows for more robust matching. By contrast, a search of the published literature is likely to yield only aggregate data. Such data are often insufficiently detailed to conclude that there are no unmeasured confounders (a critical step in matching), even in settings less complex than the tumour-agnostic space. Thus, an unbiased unanchored comparison is often not possible with aggregate data.13

There is, however, no common understanding of the data relevance, reliability and transparency standards needed for the generation of synthetic control arm data. Given this, synthetic controls vary considerably in their usefulness. In the tumour-agnostic situation, such controls are likely to be most useful when:

The control arm is designed with input from the relevant regulatory agencies and HTA bodies

The data collection and planned statistical analyses are pre-specified, and a clear process is followed to identify the best potential sources of evidence

The control arm is created using RWD from similar sites to those used in the clinical trial, covering the countries of interest for later reimbursement activities

The control arm is based on data collection for patients with the same biomarker identified via the same testing procedure as those enrolled into the clinical trials

The control arm is based on data from as similar a time period as possible to the data collected in the clinical trial. In an end-of-line position, this factor may be less relevant if prognosis has remained stable over time

The inclusion/exclusion criteria are as closely matched to the clinical trial as possible

The tumour types of patients in the control are similar to those observed in patients in the intervention’s clinical trial

The relevant prognostic covariates are collected to allow for the use of matching methods

The data are of high quality (low levels of missingness, endpoints defined in a similar manner to how they were in the clinical trial, follow-up well conducted, etc.)

As with all comparisons using a synthetic control arm, there is a tension between matching control patient characteristics to the intervention’s clinical trial as closely as possible (internal validity; likely to generate a smaller but more robust relative treatment effect and be less generalizable) and matching expected real-world usage (external validity; likely to generate a larger but biased relative treatment effect and be more generalizable). This tension increases where the pool of patients in the intervention arm for a particular tumour is small, as recruited patients may not be fully representative of the eligible population. Clear guidance on which option is more appropriate is yet to be provided by regulatory or reimbursement agencies.

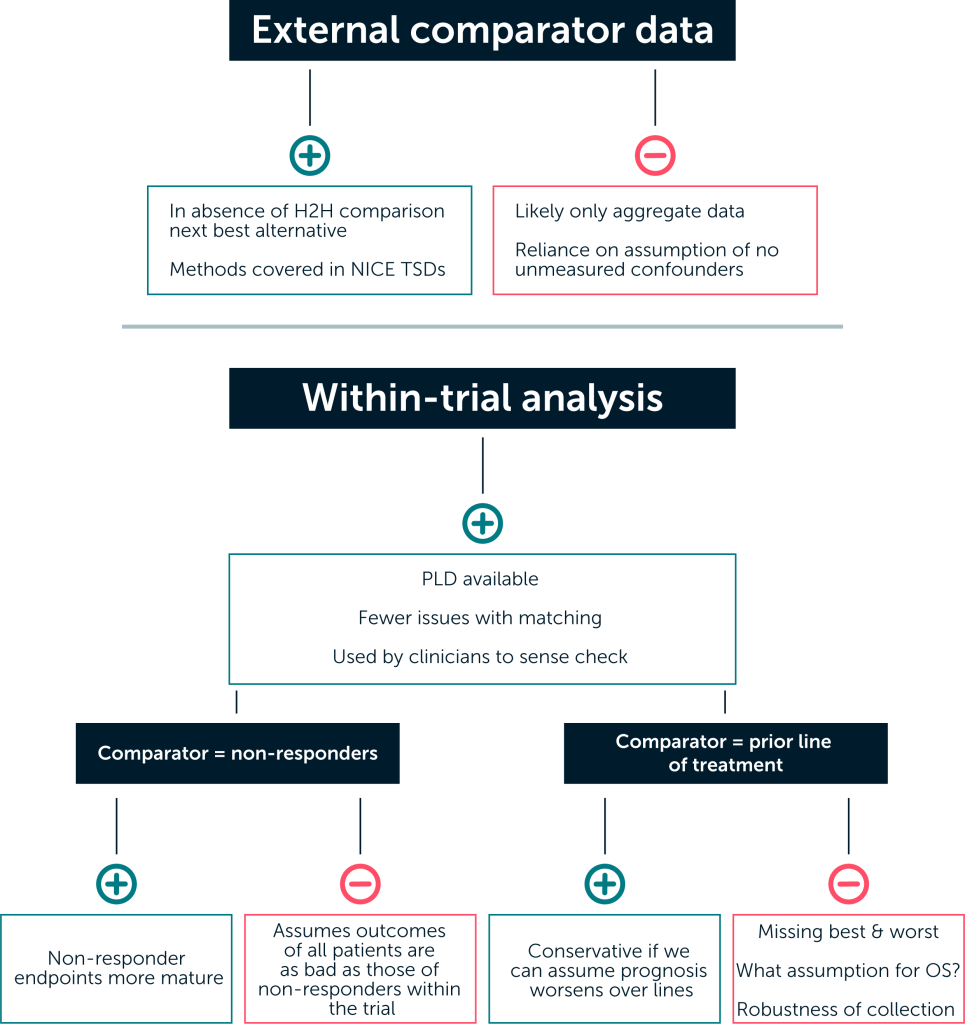

Explore scenarios using within-trial analysis

Even where external data are available (either through the literature or through generation of a synthetic control), it is difficult to generate a truly comparable data set for the population receiving the comparator treatment, so other approaches to generating these data should also be considered and their limitations explored. Outlined below are two alternative methods in which patients in the single-arm trial are used to generate a control group.22, 23 Each of these approaches has pros and cons.

Pros and cons of different approaches to making a comparison

A likely issue for any approach will be data immaturity, leading to both uncertainty and the potential for implausible estimates. This was a major issue within the partitioned survival analysis submitted to NICE for the larotrectinib single technology appraisal in 2020.9

The key advantage of conducting a within-trial analysis (such as the use of non-responder or prior-line-of-treatment data) is that it avoids any lack of comparability between comparator and intervention data, a common challenge when comparing with an external dataset. All information is drawn from the same trial, limiting issues with differences in inclusion/exclusion criteria, patient characteristics and outcomes measurement.

Other advantages of within-trial analyses are the availability of patient-level data and the familiarity of healthcare decision-makers, regulatory bodies and clinicians with such analyses. Both the use of non-responder data and the use of prior-line-of-treatment data have precedent as sense checks often carried out by the clinical community when determining how the results from single-arm trials should inform clinical practice. We focus on these two approaches below.

Using data from non-responders

This method uses effectiveness data on non-responders as a proxy for patients not receiving an active treatment. Comparator estimates of progression-free survival (PFS) and overall survival (OS) under this approach are therefore based on the observed PFS and OS of non-responders in the integrated efficacy analysis. The rationale is that patients in whom no response is observed are assumed to have derived no treatment effect, and are therefore representative of a counterfactual where no effective therapy exists. Because they are drawn from the same trial, this patient population is also likely to be well matched with the intervention arm.

However, this approach also requires strong assumptions, including:

- There are no differences other than response status between responders and non-responders that explain the survival outcomes (including baseline prognostic covariates in the analysis could help to mitigate this); and

- Non-responders derive equivalent benefit to that received on current standard of care

The degree to which these assumptions can be justified will vary, given that the reliability of response as a surrogate is likely to differ across tumour types. The assumption that the outcomes for all patients receiving the comparator treatment are the same as those of the non-responders in the trial is a strong one. Because non-responders may have a poorer underlying prognosis than other patients, their outcomes may understate those achievable on the comparator; as a result, this type of analysis would likely provide an upper limit to the treatment effect.
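The core calculation of the non-responder approach can be sketched as a Kaplan-Meier estimate of PFS restricted to non-responders. The record layout, class name and values below are hypothetical, and the sketch ignores the timing of response assessment; in practice a landmark analysis or similar would usually be needed, because patients must remain on study long enough to be classified as responders.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    pfs_months: float  # time to progression, death or censoring
    event: bool        # True if progression or death was observed
    responder: bool    # best overall response was CR or PR (hypothetical flag)


def kaplan_meier(records: list[tuple[float, bool]]) -> list[tuple[float, float]]:
    """Product-limit estimate: (time, survival) at each distinct event time."""
    data = sorted(records)
    n_at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = removed = 0
        # Group all records sharing this time; patients censored at t
        # are treated as still at risk at t
        while i < len(data) and data[i][0] == t:
            events += data[i][1]
            removed += 1
            i += 1
        if events:
            surv *= 1 - events / n_at_risk
            curve.append((t, surv))
        n_at_risk -= removed
    return curve


# Hypothetical trial records, for illustration only
patients = [
    Patient(2.0, True, False),
    Patient(3.0, False, False),
    Patient(4.0, True, False),
    Patient(5.0, True, False),
    Patient(12.0, False, True),
]
non_responders = [(p.pfs_months, p.event) for p in patients if not p.responder]
comparator_curve = kaplan_meier(non_responders)
print(comparator_curve)  # [(2.0, 0.75), (4.0, 0.375), (5.0, 0.0)]
```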

Using data from each patient’s prior line of treatment

This approach uses data taken from the trial patients’ previous line of treatment to derive OS and PFS curves. In this approach, the inverse of the ratio between average time to progression (TTP) on their previous therapy and the mean extrapolated PFS with the active therapy (also called the growth modulation index multiplier, or GMI multiplier) is applied to health outcomes for the active therapy (PFS and OS; or just PFS with the assumption of equal post-progression survival).

This crude adjustment assumes a) that the active therapy is more effective than the comparator by the same proportion as the GMI multiplier; and b) that the ratio of TTP across lines of therapy is indicative of the treatment effect. It is uncertain to what degree either of these is likely to hold true.
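The GMI adjustment can be sketched as below. The sketch assumes that the GMI multiplier is the mean extrapolated PFS on the active therapy divided by the mean TTP on the prior line, and that comparator outcomes are obtained by dividing the active-therapy outcomes by it; the function names and inputs are hypothetical, and the sketch inherits assumptions a) and b) above.

```python
def gmi_multiplier(mean_pfs_active: float, mean_ttp_prior: float) -> float:
    """Mean PFS on the active therapy divided by mean TTP on the prior line."""
    if mean_ttp_prior <= 0:
        raise ValueError("prior-line TTP must be positive")
    return mean_pfs_active / mean_ttp_prior


def comparator_outcomes(mean_pfs_active: float, mean_os_active: float,
                        gmi: float, scale_os: bool = True) -> tuple[float, float]:
    """Derive comparator mean PFS and OS by dividing active-therapy outcomes by the GMI.

    With scale_os=False only PFS is scaled, and post-progression survival
    (OS minus PFS) is assumed equal in both arms.
    """
    pfs_comp = mean_pfs_active / gmi
    if scale_os:
        os_comp = mean_os_active / gmi
    else:
        os_comp = pfs_comp + (mean_os_active - mean_pfs_active)
    return pfs_comp, os_comp


# Hypothetical means in months, for illustration only
gmi = gmi_multiplier(mean_pfs_active=24.0, mean_ttp_prior=8.0)
print(gmi)
print(comparator_outcomes(24.0, 40.0, gmi))
print(comparator_outcomes(24.0, 40.0, gmi, scale_os=False))
```

With these inputs the GMI is 3.0, so comparator mean PFS is 8 months in both variants; they differ only in how comparator OS is derived.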

The key limitations of the prior-line-of-treatment analysis are:

- The absence from the data set of patients with the best and worst outcomes: patients with very good outcomes on their prior therapy may never progress to enter the trial, while those who died or were not fit enough for another line of treatment are also absent

- The requirement for disease history to have been robustly collected within the trial, which may not have been the case

- The need for assumptions around OS, a common one being that TTP gains would translate to equivalent OS gains

Should the choice of prior therapy influence survival on subsequent lines of therapy (either positively or negatively), the inference drawn from this approach could be misleading. This analysis can, however, be considered conservative if we can assume that prognosis worsens on each subsequent line of treatment.

Provide clear bounds for plausibility

In each of the two NICE appraisals for TRK inhibitors in tumour-agnostic indications, the use of within-trial analyses to support estimates from external data was viewed as useful by the Committee.9, 10 Multiple scenarios can be used to reduce the perception of uncertainty stemming from the lack of a comparative trial, particularly where these scenarios provide similar estimates of incremental effectiveness. In the same vein, robust expert elicitation methods can be used to elicit priors for expected relative effectiveness, which can inform judgements about the plausibility of different scenarios or serve as a direct model input.24 Unfortunately, a lack of comparative data is not something that can be solved through further data collection, as clinical practice will be affected as soon as the new treatment comes to market.

Key points to consider

Conduct landscaping early on: A comparator landscaping exercise should be conducted as early as possible within the development process and cover existing standard of care as well as potential competitors within the tumour-agnostic space for the relevant biomarker.

Keep systematic searches narrow: Design systematic literature review search strategies to focus on multi-indication comparator trials and trials containing the relevant biomarker.

Include a control arm or plan to generate external control data:

- Engage early with regulatory agencies and payers – roughly 6 months before the planned initiation of the clinical trial is ideal

- Identify potential data sources systematically

- Pre-specify analyses

- Aim to collect relevant and reliable data

- Collect as much information as possible on potential prognostic characteristics

- Consider how differences in definitions between the trial and external data may affect the analyses – for example, progression information may be collected in a very different way in a real-world setting

- Collect information from patients with the biomarker of interest using the test specified within the clinical trial

- Consider the issue of internal versus external validity

Explore within-trial analyses as an approach for comparisons of single-arm trials:

- Collect data on prior lines of treatment to the clinical trial

- Explore non-responder analyses

Use scenarios to provide upper and lower bounds for the relative treatment effect: Using multiple methods for comparative analyses is recommended to reduce the perception of uncertainty and inform a plausible range of outcomes.

Use robust expert elicitation: Structured elicitation can inform priors for long-term relative effectiveness.

Propagate uncertainty through your economic model: Uncertainty driven by different potential methods for making a comparison and statistical uncertainty within comparisons should be propagated through your economic analysis, just as you would any other source of uncertainty.
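As a minimal sketch of propagating both method uncertainty and statistical uncertainty, the code below treats each comparison method as a scenario with its own lognormal distribution for the HR, samples from each, and converts the draws into incremental mean survival under a simple exponential survival assumption. The scenario names, HR distributions, comparator hazard and scenario weights are all hypothetical; pooling draws by weight is one option (a simple form of model averaging), and the weights themselves would need justification, for example through expert elicitation. A real model would use the fitted survival curves and the full cost-effectiveness calculation.

```python
import numpy as np

# Hypothetical scenarios: median HR, standard error of log(HR), scenario weight
SCENARIOS = {
    "external control (MAIC)": (0.45, 0.20, 0.4),
    "non-responders": (0.30, 0.15, 0.3),
    "prior line (GMI)": (0.60, 0.25, 0.3),
}
COMPARATOR_RATE = 1 / 6.0  # hypothetical exponential hazard per month (mean 6 months)


def incremental_mean_months(hr: np.ndarray, comp_rate: float) -> np.ndarray:
    """Mean survival gain under exponential survival: 1/(HR*rate) - 1/rate."""
    return 1.0 / (hr * comp_rate) - 1.0 / comp_rate


def simulate(n_draws: int = 10_000, seed: int = 42):
    rng = np.random.default_rng(seed)
    per_scenario, pooled = {}, []
    for name, (median_hr, se_log_hr, weight) in SCENARIOS.items():
        # Statistical uncertainty within a scenario
        hr = np.exp(rng.normal(np.log(median_hr), se_log_hr, n_draws))
        gain = incremental_mean_months(hr, COMPARATOR_RATE)
        per_scenario[name] = np.percentile(gain, [2.5, 50, 97.5])
        # Method uncertainty: pool draws in proportion to the scenario weight
        k = int(round(weight * n_draws))
        pooled.append(rng.choice(gain, size=k, replace=False))
    return per_scenario, np.percentile(np.concatenate(pooled), [2.5, 50, 97.5])


per_scenario, pooled = simulate()
for name, (lo, med, hi) in per_scenario.items():
    print(f"{name}: median {med:.1f} months (95% interval {lo:.1f} to {hi:.1f})")
print("pooled:", np.round(pooled, 1))
```

Reporting each scenario separately gives the upper and lower bounds recommended above, while the pooled interval shows how the choice of method feeds into overall decision uncertainty.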

For information on how we can support your next tumour-agnostic therapy, please contact us.


Synthetic control arm: A control arm created by aggregating data from one or more sources outside of clinical trials; the term often refers to the use of real-world data from multiple sources to synthetically create a cohort of patients.

References

1. Offin M, Liu D and Drilon A. Tumor-Agnostic Drug Development. American Society of Clinical Oncology Educational Book. 2018; (38):184-7.

2. Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen (IQWiG). Larotrectinib in tumours with NTRK gene fusion: Data are not yet sufficient for derivation of an added benefit. 2020. (Updated: 15 January 2020) Available at: https://www.iqwig.de/en/presse/press-releases/press-releases-detailpage_9986.html. Accessed: 7 July 2021.

3. Meyer EL, Mesenbrink P, Dunger-Baldauf C, et al. The Evolution of Master Protocol Clinical Trial Designs: A Systematic Literature Review. Clinical Therapeutics. 2020; 42(7):1330-60.

4. Murphy P, Glynn D, Dias S, et al. Modelling approaches for histology-independent cancer drugs to inform NICE appraisals. 2020. (Updated: 28 February 2020) Available at: https://www.nice.org.uk/Media/Default/About/what-we-do/Research-and-development/histology-independent-HTA-report-1.docx. Accessed: 31 August 2021.

5. Vokuhl C, Nourkami-Tutdibi N, Furtwängler R, et al. ETV6-NTRK3 in congenital mesoblastic nephroma: A report of the SIOP/GPOH nephroblastoma study. Pediatr Blood Cancer. 2018; 65(4).

6. Canadian Agency for Drugs and Technologies in Health (CADTH). CADTH Reimbursement Recommendation (Draft): Larotrectinib (Vitrakvi). 2021. (Updated: 4 May 2021) Available at: https://www.cadth.ca/larotrectinib. Accessed: 31 August 2021.

7. Bazhenova L, Lokker A, Snider J, et al. TRK Fusion Cancer: Patient Characteristics and Survival Analysis in the Real-World Setting. Targeted Oncology. 2021; 16(3):389-99.

8. Pietrantonio F, Di Nicolantonio F, Schrock AB, et al. ALK, ROS1, and NTRK Rearrangements in Metastatic Colorectal Cancer. J Natl Cancer Inst. 2017; 109(12).

9. National Institute for Health and Care Excellence (NICE). [TA630] Larotrectinib for treating NTRK fusion-positive solid tumours. 2020. (Updated: 27 May 2020) Available at: https://www.nice.org.uk/guidance/ta630. Accessed: 31 August 2021.

10. National Institute for Health and Care Excellence (NICE). [TA644] Entrectinib for treating NTRK fusion-positive solid tumours. 2020. (Updated: 12 August 2020) Available at: https://www.nice.org.uk/guidance/ta644. Accessed: 31 August 2021.

11. Judson I, Verweij J, Gelderblom H, et al. Doxorubicin alone versus intensified doxorubicin plus ifosfamide for first-line treatment of advanced or metastatic soft-tissue sarcoma: a randomised controlled phase 3 trial. Lancet Oncol. 2014; 15(4):415-23.

12. Vellekoop H, Huygens S, Versteegh M, et al. Guidance for the Harmonisation and Improvement of Economic Evaluations of Personalised Medicine. PharmacoEconomics. 2021; 39(7):771-88.

13. Phillippo D, Ades A, Dias S, et al. NICE DSU Technical Support Document 18: Methods for population-adjusted indirect comparisons in submissions to NICE. 2016. (Updated: December 2016) Available at: http://nicedsu.org.uk/technical-support-documents/population-adjusted-indirect-comparisons-maic-and-stc/. Accessed: 7 July 2021.

14. European Medicines Agency (EMA). Yescarta: EPAR – Product Information. (Updated: 08 July 2021) Available at: https://www.ema.europa.eu/en/medicines/human/EPAR/yescarta. Accessed: 08 November 2021.

15. National Institute for Health and Care Excellence (NICE). [TA559] Axicabtagene ciloleucel for treating diffuse large B-cell lymphoma and primary mediastinal large B-cell lymphoma after 2 or more systemic therapies. 2019. (Updated: 23 January 2019) Available at: https://www.nice.org.uk/guidance/ta559. Accessed: 7 July 2021.

16. Spinner J. Medidata synthetic control arm lands FDA approval for cancer trial. 2020. (Updated: 19 November 2020) Available at: https://www.outsourcing-pharma.com/Article/2020/11/19/Synthetic-control-arm-lands-FDA-approval-for-cancer-trial. Accessed: 7 July 2021.

17. Beaulieu-Jones BK, Finlayson SG, Yuan W, et al. Examining the Use of Real-World Evidence in the Regulatory Process. Clin Pharmacol Ther. 2020; 107(4):843-52.

18. Gottlieb S. Breaking Down Barriers Between Clinical Trials and Clinical Care: Incorporating Real World Evidence into Regulatory Decision Making. 2019. (Updated: 28 January 2019) Available at: https://www.fda.gov/news-events/speeches-fda-officials/breaking-down-barriers-between-clinical-trials-and-clinical-care-incorporating-real-world-evidence. Accessed: 7 July 2021.

19. Davies J, Martinec M, Delmar P, et al. Comparative effectiveness from a single-arm trial and real-world data: alectinib versus ceritinib. Journal of Comparative Effectiveness Research. 2018; 7(9):855-65.

20. European Medicines Agency (EMA). Alecensa: EPAR – Product Information. (Updated: 11 October 2021) Available at: https://www.ema.europa.eu/en/medicines/human/EPAR/alecensa. Accessed: 08 November 2021.

21. European Medicines Agency (EMA). Blincyto: EPAR – Product Information. (Updated: 13 July 2021) Available at: https://www.ema.europa.eu/en/medicines/human/EPAR/blincyto. Accessed: 08 November 2021.

22. Krebs MG, Blay JY, Le Tourneau C, et al. Intrapatient comparisons of efficacy in a single-arm trial of entrectinib in tumour-agnostic indications. ESMO Open. 2021; 6(2):100072.

23. Hatswell AJ, Thompson GJ, Maroudas PA, et al. Estimating outcomes and cost effectiveness using a single-arm clinical trial: ofatumumab for double-refractory chronic lymphocytic leukemia. Cost Eff Resour Alloc. 2017; 15:8.

24. Bojke L, Soares M, Claxton K, et al. Developing a reference protocol for structured expert elicitation in health-care decision-making: a mixed-methods study. Health Technol Assess. 2021; 25(37):1-124.